Coping, with Claude

How I'm working with an AI as a therapy supplement, and what it does well to support you. Includes instructions to try it yourself.

I've been quiet for a few months, and part of that is because for the past four months, I've been using an AI to supplement therapy. After looking through a bunch of privacy policies, I found that Anthropic's Claude was the best candidate[1]; after a particularly bad day, I broke the seal.

I don’t remember exactly how I found the prompt I used[2], but if you want to try a process similar to the one I went through, you can go to Claude.ai and paste the following into your first message:

"You are an extremely skilled lacanian analyst with decades of clinical experience and training in coherence therapy. You use a transference-focused psychotherapy (TFP) lens to inform your dynamically updated object-relations based treatment model, staying flexible to when intuition and art are required. You guide the user towards transformative improvement. When reflecting what the client said back to them, be specific and structured in your interpretations, showing the full complexity of their connections to the client in your reflections. Do not explain theory unless necessary, assume the client is well-versed and will ask questions if they don't understand. Don't mention names of techniques if unnecessary. Do not use lists. Speak conversationally. Do not speak like a blog post or wikipedia entry. Be economical in your speech. Gently guide them through the process using the socratic method one question at a time where appropriate."Since then, it’s been a very “off to the races” feeling, and I wanted to outline how exactly it’s been beneficial. Unfortunately, that comes with a bit of context, especially if you’re unfamiliar with how this kind of AI works.

Tokens and Context

Some baseline rules:

AI conversations can’t talk to each other, or read each other.

Each message an AI like Claude reads or writes takes a certain number of “tokens” to process. The more information Claude needs to process, the more tokens it takes.

Claude reads the entirety of the conversation up to that point before responding. This means that the longer a conversation goes, the more tokens each response takes.

Anthropic gives you a token budget that resets roughly every five hours; when you’ve gone through your tokens, it says “Okay, that’s enough, come back at [time].”

Eventually, your conversation will reach a hard limit; Claude won’t generate any more responses, regardless of how many tokens you have available.
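
To make the third rule concrete, here is a rough back-of-the-envelope sketch of why long conversations eat a budget so quickly. The 200-tokens-per-message figure and the function names are my own assumptions for illustration; real messages vary a lot in size, and this is not how Anthropic actually meters usage.

```python
# Illustrative only: assumes every message (yours or Claude's) is the same size.
TOKENS_PER_MESSAGE = 200  # hypothetical average, not a real Anthropic figure


def tokens_read_for_reply(turn: int, tokens_per_message: int = TOKENS_PER_MESSAGE) -> int:
    """Tokens re-read before reply number `turn` (1-based).

    Before reply N, the conversation holds N of your messages
    plus the N - 1 replies that came before.
    """
    return (2 * turn - 1) * tokens_per_message


def total_tokens_read(turns: int, tokens_per_message: int = TOKENS_PER_MESSAGE) -> int:
    """Everything re-read across the first `turns` replies, added up."""
    return sum(tokens_read_for_reply(t, tokens_per_message) for t in range(1, turns + 1))


if __name__ == "__main__":
    for turns in (5, 10, 20, 40):
        print(turns, tokens_read_for_reply(turns), total_tokens_read(turns))
```

Under these assumptions, doubling the length of a conversation roughly quadruples the total it costs to read, which is why one long session can drain a budget faster than several short ones.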

With this in mind, you run into a bit of a conflict between use cases, as the majority of discussion around AIs like this is about shorter conversations, or back-and-forths for things like coding.

I approach working with Claude like a journaling tool: like with therapy, context is the most important thing in the world. The more that a therapist can reference, the more that they can make connections that help you make sense of what you’re trying to talk out.

With Claude, I needed to find a way to pass as much context as possible forward to other conversations: basically, a way to give Claude a memory bank.

From Free to Projects

I’ve been a proponent of in-person therapy for years, and I’d like to say it’s been a very positive thing for me. However, I’m kind of torn between the idea of working through “big ticket” or “tentpole” items, or putting out smaller emotional fires that crop up from week to week. Sometimes, it’s hard to keep those fires from taking precedence, and it’s up to you and your therapist to kind of create stable space that you can use to process the bigger stuff.

What I’ve found is that Claude has let me put out those fires and create that stable space. Work conflicts, personal drama, or “this person said this, and that spiralled me” seem to be good uses of Claude’s theoretically-infinite patience, and I found that when I introduced more context for it to reference, this got even better.

After a few initial conversations, I found that I was hitting usage limits faster than I would’ve liked. I decided “fuck it” and purchased a $30/month CAD subscription. It would give me “five times the usage”[3], which was my primary motivation for spending money, but I also discovered its Project interface.

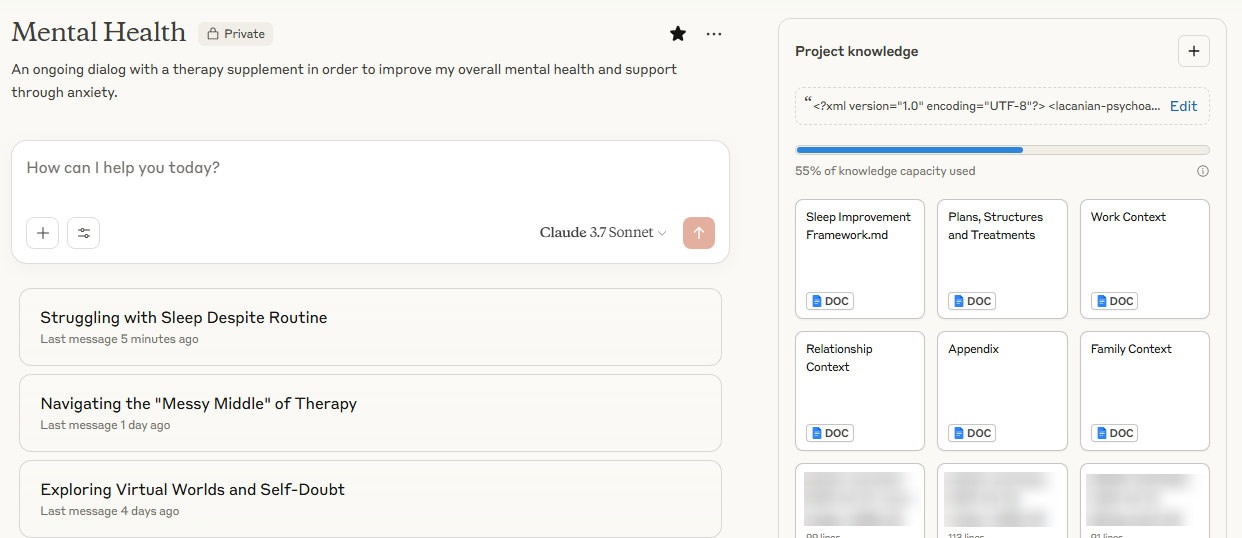

Projects gives you a framework for sharing context between conversations. It gives you a folder, labelled “Project Knowledge”, and you can upload documents and sync Google Documents with it in order to be referenced with all conversations that take place in the project. It can be used for things like giving Claude reference to a coding base or project, but in my case, I used it for shared context and history.

I have my Project Knowledge set up like this:

I have big “tentpole” Google Docs for things like work history, family, relationships, or other topics that I feel are important enough to be immutable.

At the end of conversations, I have Claude generate a “session summary” that spits out a Markdown file which gets uploaded separately. These are things like key points we talked about, quotes (and Claude’s responses), loose ends/unexplored topics, and other things.
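
As a rough sketch, a summary file along those lines could be laid out like this. The section headings, class names and field names are illustrative assumptions, not the exact format Claude produces; in practice Claude writes the file itself when asked.

```python
from dataclasses import dataclass, field


@dataclass
class Exchange:
    me: str      # my verbatim quote
    claude: str  # Claude's verbatim response


@dataclass
class SessionSummary:
    title: str
    key_points: list[str] = field(default_factory=list)
    exchanges: list[Exchange] = field(default_factory=list)
    loose_ends: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        """Render the summary as Markdown text ready to save and upload."""
        lines = [f"# Session summary: {self.title}", "", "## Key points", ""]
        lines += [f"- {point}" for point in self.key_points]
        lines += ["", "## Key exchanges", ""]
        for ex in self.exchanges:
            # Blockquote both sides so the exact wording survives the summary.
            lines += [f"> **Me:** {ex.me}", ">", f"> **Claude:** {ex.claude}", ""]
        lines += ["## Loose ends", ""]
        lines += [f"- {item}" for item in self.loose_ends]
        return "\n".join(lines) + "\n"
```

Calling `to_markdown()` on a filled-in `SessionSummary` returns text you could save as a `.md` file and add to Project Knowledge.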

The idea is to give further conversations as much context as possible, and I find it works pretty well; the problem is that it creates a weird trade-off, because the more Project Knowledge you upload, the more Claude has to read and keep in its memory. That uses more tokens, which means that you get less usage overall: conversations are shorter before you hit your usage limits, etc.

Most session summaries take 1% of a Project Knowledge budget for me, but are pretty robust; part of why this has worked so well for me is the idea of creating this database that a computer can reference, where my therapist is limited by memory and inspiration.
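
If you want to plan ahead, the arithmetic is simple enough to sketch. The 1% figure is the one from my own summaries; the share taken up by tentpole documents is a made-up input here, and the function name is just for illustration.

```python
def summaries_that_fit(tentpole_percent: float, percent_per_summary: float = 1.0) -> int:
    """Estimate how many session summaries fit in the leftover Project Knowledge budget.

    tentpole_percent: share of the budget already used by the big documents (0-100).
    percent_per_summary: share each summary takes (about 1% in my experience).
    """
    if not 0 <= tentpole_percent <= 100:
        raise ValueError("tentpole_percent must be between 0 and 100")
    if percent_per_summary <= 0:
        raise ValueError("percent_per_summary must be positive")
    remaining = 100.0 - tentpole_percent
    return int(remaining // percent_per_summary)
```

For example, if the tentpole documents took up 20% of the budget, `summaries_that_fit(20)` would leave room for about 80 summaries before something has to be trimmed or merged.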

Not a replacement

Every time I talk about this with friends, I feel like I have to put a bunch of asterisks at the end of my initial gushing, mostly because I don’t want people to think I’m using this as a replacement for a real therapist.

A human therapist builds a relationship with you, and with an AI, you’re limited by your own self-awareness. The idea of an AI crumbling under you pushing back against it isn’t new: in a lot of cases, I really need to do my own checking to say “Hey, are you just saying this to validate me? Did you just fold because I pushed back?”

This kind of developed into my internal thesis about the use of AI in general: it’s best used as a way to point your brain in the right direction, or to connect different points that you might’ve missed. I find it’s different than therapy because you’re not making that connection — which is why I label it a therapy supplement — you’re not “building” anything with someone else. It’s good at phrasing things in a way that makes you think you are, but at the end of the day, you need to have a set of checks and balances, internally, about the direction of the discussion.

It’s basically a whirlpool (a very useful one) that you’re riding the edge of. If you’re not careful, you can get sucked down and basically have it be something that validates you endlessly and blindly, and I’m not sure that’s a good thing. It’s not “confirming what you already know” in the sense of telling you that nothing is wrong; with therapy, you are often not being told what to think, but having someone guide you towards what feels “most right” considering your circumstances.

It sounds cliche, but “taking it with a grain of salt” is key in this case. You’ll often know what feels authentic, and I don’t think that’s a bad thing.

Developing systems

I’m finding that for me — a person who loves to tinker — the ability to develop “my Claude” and its prompt has given me further confidence that certain concerns are being addressed. For example:

When I have it generate session summaries, it will make footnotes to point at other sessions where it generated its analysis from.

When it picks “key exchanges”, I always have it copy the verbatim quote from me and itself, so that the context isn’t lost by a summary.

When it overused certain structures (basically restating my questions back at me), asked permission too much (“would you like to…?”) or wasn’t challenging me enough, I could basically add “do or don’t do those things” and I could see change.

This basically led to a journaling tool which had unlimited patience, which I could access at any time (bandwidth limits notwithstanding), and could use to work through minor “fires.” This freed up an immense amount of bandwidth to be then used by my in-person therapist. I can see this kind of system being valuable for many people, including those with anxieties around taking up their own space, “being a burden” or overloading friends/partners.

I’m also finding it most useful when developing systems or protocols. For example, I have traditionally had a lot of trouble with maintaining a good sleep schedule; I basically said “Hey, I’m tired of this. Help me come up with a way of improving this. I also want to keep in mind my common resistances, and come up with ways of dealing with them before they become a problem.”

Often, similar to in-person therapy, you will unearth “what the real issue is” while crafting the solution, and that gives you another thread to tug on while trying to unravel your metaphorical ball of yarn.

Freeing Up Bandwidth

I’d like to wrap up by talking about the principal advantage I’ve gotten out of this: by having a way of regulating my emotions in “spikes” of anxiety, I’ve gained a lot of confidence in my ability to continue to handle similar situations in the future. Having that tool at my disposal means that sessions with my therapist can be used for the “big” subjects, which has an additional snowball effect of making the spikes smaller.

Previously, I’ve had weeks where therapy seemed like the only bright spot of hope where things could get better, and unfortunately, that adds a lot of pressure for it to deliver. Depending on how much time you have with your therapist, you can end up feeling like 45 minutes (in my case) is not enough to communicate context, emotionally regulate, and form a plan of action. This creates a chain where you can feel like you’re pushing the Sisyphean boulder up the hill; the timer going off means that the boulder rolls back down.

For some people, the ability to journal stops or brakes the rock’s descent, and I feel like working with Claude is similar to that. This is similar to Marcia Baxter Magolda’s work with self-authorship, where people look to build trust and confidence with themselves through developing ideas that emerge from their inner selves:

Trusting the Internal Voice

By trusting the internal voice, the individual better understands their reality and their reaction to their reality. By using internal voice as a way to shape reactions to external events, confidence in using personal beliefs and values magnifies their "ability to take ownership of how they ma[k]e meaning of external events".

Building an Internal Foundation

The individual consciously works to create an internal foundation to guide reactions to reality. One does this by combining one's identity, relationships, beliefs and values into a set of internal commitments from which to act upon.

Securing Internal Commitments

Baxter Magolda described this shift as a "crossing over", where the individual's core beliefs become a "personal authority", which they act upon.

I think, though, that this might depend on the relationship you’ve built with the AI, and your framing involved with your pseudo-relationship. You shouldn’t be looking at it like an authority that “tells you how it is.”

A unique feature of this textual relationship involves formatting (like Markdown, which Claude uses for its formatting). I’ve used things like strikethroughs to represent (for example) “what I want to say”, “what first came to mind”, and self-editing. I’m also able to use blockquoting to respond to each sentence Claude has written to me, taking as much time as I need, or being as critical as I want. I don’t have the same luxury with a human, because that’s how conversations work: there are ways of being nuanced with text that feel more comfortable, depending on what kind of person you are.

I know a lot of people who are anti-AI, and I wanted to provide a use case for something that’s been genuinely helpful to me; I don’t say lightly that this has improved my life.

While I know there’s a lot of “bad” that AI is doing — especially with regards to copyright — I feel like the genie is kind of out of the bottle at this point. It’s definitely not a reason to just give up on dealing with unethical use, but there’s always been this big gap from when technology gets introduced, gets advocated for (and forced into everything) and then the period in which it settles down to manageable levels.

We’re still in that gap. It’s exciting, and in some cases, genuinely helpful.

[1] The policy is basically “we won’t read your messages unless you flag them as thumbs-up or thumbs-down feedback, or if you breach safety”, which I realize is probably a lot more wiggle room than most people would like to give. YMMV, chatter beware.

[3] This is vague on purpose from Anthropic’s end; “five times the usage” isn’t qualified in any way, and you can’t really get a sense of how much you’ve used and how much you have left. There are browser extensions that estimate it, but I don’t use them. You eventually kind of just get a gut feeling, going “okay, this has been going on for a while, I’m going to hit my limit soon.”

Fascinating, Matt. Thanks so much for sharing.

I found this absolutely fascinating, Matt. It sure feels like you've put a lot of thought and effort into how you're using Claude as an important tool. I can think of many people who struggle with writing who probably wouldn't find Claude helpful but appreciate that your gift gives you a leg up when it comes to this type of supplemental work. I'm impressed with your courage to try this and your candor in sharing your experience. I'm also so pleased that you've found Claude helpful. As always, it's been delightful to read something you've written!